I had to cluster two VMs in ESXi 5.1 for a certain application. It's not your run-of-the-mill activity, but it happens, so here are the steps.

First off, we install the VMs; then we set up the clustering.

You will need the following RPMs:

- cluster-glue-libs-1.0.5-2.el6.x86_64.rpm

- clusterlib-3.0.12.1-23.el6.x86_64.rpm

- cman-3.0.12.1-23.el6.x86_64.rpm

- corosync-1.4.1-4.el6.x86_64.rpm

- corosynclib-1.4.1-4.el6.x86_64.rpm

- fence-agents-3.1.5-10.el6.x86_64.rpm

- fence-virtd-0.2.3-5.el6.x86_64.rpm

- fence-virtd-libvirt-0.2.3-5.el6.x86_64.rpm

- history.rpm

- ipmitool-1.8.11-12.el6.x86_64.rpm

- libibverbs-1.1.5-3.el6.x86_64.rpm

- librdmacm-1.0.14.1-3.el6.x86_64.rpm

- libtool-2.2.6-15.5.el6.x86_64.rpm

- libtool-ltdl-2.2.6-15.5.el6.x86_64.rpm

- libvirt-0.9.4-23.el6.x86_64.rpm

- libvirt-client-0.9.4-23.el6.x86_64.rpm

- luci-0.23.0-32.el6.x86_64.rpm

- modcluster-0.16.2-14.el6.x86_64.rpm

- nss-tools-3.12.10-16.el6.x86_64.rpm

- oddjob-0.30-5.el6.x86_64.rpm

- openais-1.1.1-7.el6.x86_64.rpm

- openaislib-1.1.1-7.el6.x86_64.rpm

- perl-Net-Telnet-3.03-11.el6.noarch.rpm

- pexpect-2.3-6.el6.noarch.rpm

- python-formencode-1.2.2-2.1.el6.noarch.rpm

- python-paste-1.7.4-1.el6.noarch.rpm

- python-repoze-who-1.0.13-2.el6.noarch.rpm

- python-repoze-who-friendlyform-1.0-0.3.b3.el6.noarch.rpm

- python-setuptools-0.6.10-3.el6.noarch.rpm

- python-suds-0.4.1-3.el6.noarch.rpm

- python-toscawidgets-0.9.8-1.el6.noarch.rpm

- python-tw-forms-0.9.9-1.el6.noarch.rpm

- python-webob-0.9.6.1-3.el6.noarch.rpm

- python-zope-filesystem-1-5.el6.x86_64.rpm

- python-zope-interface-3.5.2-2.1.el6.x86_64.rpm

- resource-agents-3.9.2-7.el6.x86_64.rpm

- rgmanager-3.0.12.1-5.el6.x86_64.rpm

- ricci-0.16.2-43.el6.x86_64.rpm

- samba-client-3.5.10-114.el6.x86_64.rpm

- sg3_utils-1.28-4.el6.x86_64.rpm

- TurboGears2-2.0.3-4.el6.noarch.rpm

I copied all these RPMs to a folder and ran the commands from there. You will also need to install their dependencies, which will depend on your system. This particular VM was pretty stripped down, so I installed the following with yum and the rest with a straight rpm install. (You could write down all the dependencies yum pulls in, but I didn't.)

yum install libvirt-client-0.9.4-23.el6.x86_64

yum install sg3_utils

yum install libvirt

yum install fence-agents

yum install fence-virt

yum install TurboGears2

yum install samba-3.5.10-114.el6.x86_64

yum install cifs-utils-4.8.1-5.el6.x86_64

rpm -ivh perl*

rpm -ivh python*

rpm -ivh python-repoze-who-friendlyform-1.0-0.3.b3.el6.noarch.rpm

rpm -ivh python-tw-forms-0.9.9-1.el6.noarch.rpm

rpm -ivh fence-virtd-0.2.3-5.el6.x86_64.rpm

rpm -ivh libibverbs-1.1.5-3.el6.x86_64.rpm

rpm -ivh librdmacm-1.0.14.1-3.el6.x86_64.rpm

rpm -ivh corosync*

rpm -ivh libtool-ltdl-2.2.6-15.5.el6.x86_64.rpm

rpm -ivh cluster*

rpm -ivh openais*

rpm -ivh pexpect-2.3-6.el6.noarch.rpm

rpm -ivh oddjob-0.30-5.el6.x86_64.rpm

rpm -ivh nss-tools-3.12.10-16.el6.x86_64.rpm

rpm -ivh ipmitool-1.8.11-12.el6.x86_64.rpm

rpm -ivh fence-virtd-libvirt-0.2.3-5.el6.x86_64.rpm

rpm -ivh libvirt-0.9.4-23.el6.x86_64.rpm

rpm -ivh modcluster-0.16.2-14.el6.x86_64.rpm

rpm -ivh ricci-0.16.2-43.el6.x86_64.rpm

rpm -ivh fence*

rpm -ivh cman-3.0.12.1-23.el6.x86_64.rpm

rpm -ivh luci-0.23.0-32.el6.x86_64.rpm

rpm -ivh resource-agents-3.9.2-7.el6.x86_64.rpm

rpm -ivh rgmanager-3.0.12.1-5.el6.x86_64.rpm
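If you'd rather not work out the rpm order by hand, one alternative (a minimal sketch, not what I actually ran) is to hand every RPM in the folder to yum in a single localinstall transaction, so yum sorts out the install order and pulls any missing dependencies from your configured repositories. The function names and the --run flag are made up for this example; without --run the script changes nothing.

```bash
#!/usr/bin/env bash
# Sketch: install every RPM in one folder with a single yum transaction.
# Assumes RHEL/CentOS 6 with yum; collect_rpms/install_all and the --run
# flag are hypothetical names for this example.
set -e

# Fill the global array RPMS with the .rpm files found in directory $1.
# Returns non-zero if there are none.
collect_rpms() {
  local dir="${1:-.}" f
  RPMS=()
  for f in "$dir"/*.rpm; do
    # An unmatched glob stays literal, so skip anything that isn't a file.
    if [ -f "$f" ]; then
      RPMS+=("$f")
    fi
  done
  [ "${#RPMS[@]}" -gt 0 ]
}

# yum resolves the install order itself and fetches anything missing from
# the configured repos; --nogpgcheck assumes the local files aren't signed
# with a key yum already trusts.
install_all() {
  if ! collect_rpms "${1:-.}"; then
    echo "no .rpm files found in ${1:-.}" >&2
    return 1
  fi
  yum -y --nogpgcheck localinstall "${RPMS[@]}"
}

# Only touch the system when explicitly asked: bash install.sh --run [dir]
if [ "${1:-}" = "--run" ]; then
  install_all "${2:-.}"
fi
```

Run it as root from the folder holding the RPMs. The upside over individual rpm -ivh calls is that nothing gets installed twice and a missing dependency is reported (or fetched) before anything changes.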

Now add an /etc/cluster/cluster.conf file, which would look like this:

<?xml version="1.0"?>

<cluster config_version="3" name="name-of-cluster">

<clusternodes>

<clusternode name="vm01" nodeid="1">

<fence>

<method name="VMWare_Vcenter_SOAP">

<device name="vcenter" port="DATACENTER/FOLDER/VM01" ssl="on"/>

</method>

</fence>

</clusternode>

<clusternode name="vm02" nodeid="2">

<fence>

<method name="VMWare_Vcenter_SOAP">

<device name="vcenter" port="DATACENTER/FOLDER/VM02" ssl="on"/>

</method>

</fence>

</clusternode>

</clusternodes>

<cman expected_votes="1" transport="udpu" two_node="1"/>

<rm>

<failoverdomains>

<failoverdomain name="failoverdomain-1" nofailback="1" ordered="0" restricted="0">

<failoverdomainnode name="vm01"/>

<failoverdomainnode name="vm02"/>

</failoverdomain>

</failoverdomains>

<resources>

<ip address="10.10.18.137" monitor_link="on" sleeptime="2"/>

</resources>

<service domain="failoverdomain-1" name="HTTP_service" recovery="relocate">

<script file="/etc/init.d/httpd" name="httpd"/>

<ip ref="10.10.18.137"/>

</service>

</rm>

<fencedevices>

<fencedevice agent="fence_vmware_soap" ipaddr="10.1.9.10" login="svc.rhc.fencer.la1" name="vcenter" passwd="chooseagoodpassword"/>

</fencedevices>

<fence_daemon post_join_delay="20"/>

<logging debug="on" syslog_priority="debug">

<logging_daemon debug="on" name="corosync"/>

</logging>

</cluster>

This configuration file just monitors a simple httpd service; you can then swap in whatever service you actually need clustered.
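Before starting cman it's worth sanity-checking the file. Here's a minimal sketch: it runs ccs_config_validate (the schema checker that ships with cman on RHEL 6) and adds a crude grep/sed check that every failoverdomainnode name matches a clusternode name exactly. The parsing assumes one element per line with double-quoted attributes, as in the file above; the function names and the --run flag are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check cluster.conf before starting the cluster services.
# xml_attr_values/check_failover_nodes and --run are hypothetical names.
set -eo pipefail

CONF="${CONF:-/etc/cluster/cluster.conf}"

# Print the value of attribute $2 for each <$1 ...> element in file $3.
# Crude: assumes one element per line and double-quoted attributes.
xml_attr_values() {
  local tag="$1" attr="$2" file="$3"
  { grep -o "<$tag [^>]*" "$file" || true; } |
    sed -n "s/.* $attr=\"\([^\"]*\)\".*/\1/p"
}

# Fail if any failoverdomainnode name is not exactly (case-sensitively)
# one of the clusternode names.
check_failover_nodes() {
  local file="$1" nodes n rc=0
  nodes=$(xml_attr_values clusternode name "$file")
  while IFS= read -r n; do
    if ! grep -qxF -- "$n" <<<"$nodes"; then
      echo "failoverdomainnode '$n' is not a clusternode name" >&2
      rc=1
    fi
  done < <(xml_attr_values failoverdomainnode name "$file")
  return "$rc"
}

if [ "${1:-}" = "--run" ]; then
  check_failover_nodes "$CONF"
  # Schema check from the cman package (assumed to check the installed
  # /etc/cluster/cluster.conf when run without arguments).
  ccs_config_validate
fi
```

The name check is deliberately strict: a failoverdomainnode of VM01 next to a clusternode of vm01 gets flagged, since a mismatch like that is easy to miss by eye.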

** Make sure DNS is working properly, that the /etc/hosts file is up to date, and that the order in /etc/host.conf is hosts,bind.
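A small sketch to check this on each node. One caveat worth verifying on your own build: as far as I know, on glibc-based systems like RHEL 6 the lookup order is governed by the hosts: line in /etc/nsswitch.conf rather than by host.conf, so the sketch looks at both. The function names are hypothetical; vm01/vm02 are the node names from the example cluster.conf.

```bash
#!/usr/bin/env bash
# Sketch: check name-resolution settings on a cluster node.
# hostconf_order_ok/nsswitch_files_first/check_nodes_resolve are hypothetical.
set -e

# True if the "order" line in host.conf-style file $1 starts with hosts.
hostconf_order_ok() {
  local file="${1:-/etc/host.conf}" order
  [ -r "$file" ] || return 1
  order=$(sed -n 's/^[[:space:]]*order[[:space:]][[:space:]]*//p' "$file")
  case "$order" in
    hosts*) return 0 ;;   # "hosts,bind" or "hosts bind"
    *) return 1 ;;
  esac
}

# True if the hosts: line in nsswitch-style file $1 lists "files" first
# (assumption: this is what glibc actually consults).
nsswitch_files_first() {
  local file="${1:-/etc/nsswitch.conf}" first
  [ -r "$file" ] || return 1
  first=$(awk '$1 == "hosts:" { print $2; exit }' "$file")
  [ "$first" = "files" ]
}

# True if every name given resolves through the normal lookup path.
check_nodes_resolve() {
  local n rc=0
  for n in "$@"; do
    if ! getent hosts "$n" >/dev/null; then
      echo "cannot resolve $n" >&2
      rc=1
    fi
  done
  return "$rc"
}

if [ "${1:-}" = "--run" ]; then
  hostconf_order_ok || echo "warning: host.conf order is not hosts,bind" >&2
  nsswitch_files_first || echo "warning: nsswitch.conf does not list files first" >&2
  check_nodes_resolve vm01 vm02
fi
```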

Now let's start all the services and make sure they come up at boot time:

service cman start

service rgmanager start

service modclusterd start

chkconfig cman on

chkconfig rgmanager on

chkconfig modclusterd on
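The same six commands as a loop, plus a quick health check afterwards. This is a sketch: DRY_RUN and --run are hypothetical knobs for this example (with DRY_RUN set it only prints the commands). cman_tool nodes (from cman) and clustat (from rgmanager) are the usual RHEL 6 status tools; run them on both nodes.

```bash
#!/usr/bin/env bash
# Sketch: start the cluster services and enable them at boot (RHEL 6 SysV init).
# DRY_RUN and --run are hypothetical knobs for this example.
set -e

# Order matters: rgmanager needs cman (membership/quorum) to be up first.
SERVICES="cman rgmanager modclusterd"

start_and_enable() {
  local svc
  for svc in $SERVICES; do
    # With DRY_RUN set, ${DRY_RUN:+echo} turns each command into a print.
    ${DRY_RUN:+echo} service "$svc" start
    ${DRY_RUN:+echo} chkconfig "$svc" on
  done
}

if [ "${1:-}" = "--run" ]; then
  start_and_enable
  cman_tool nodes   # both nodes should show status M (member)
  clustat           # membership plus the state of HTTP_service
fi
```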

Next, we need a VMware user that only has rights to start/stop the VMs; that's the user/password you reference in the cluster.conf file.

* Note: if your VMware authenticates against an Active Directory server, you need a specific user there. In VMware 4.x that can be any user, and you assign the roles as below. However, in VMware 5.x, which adds SSO (Single Sign-On), the Active Directory user now has to have certain rights (which I will fill in later in the blog); otherwise it will not work.

Next, we want to create rules on the vCenter so that both VMs don't end up on the same host; otherwise a single VMware host going down would take out both nodes and defeat our clustering efforts.

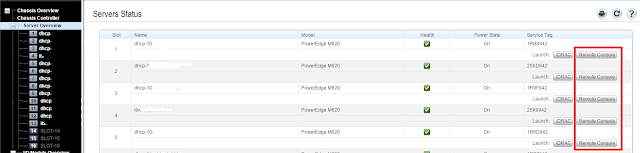

So first we open the cluster settings, as below:

Then go to vSphere DRS and open the Rules section under it:

I give the rule whatever name I want, choose the VMs it applies to, and choose what to do with them; in this case, I choose to separate them.

Your rules will now look like this:

In order to allow fence_vmware_soap to work, the configured vCenter user account needs to belong to a role with the following four permissions set in vSphere:

- System.Anonymous

- System.View

- VirtualMachine.Interact.PowerOff

- VirtualMachine.Interact.PowerOn

Next, we create a role that is only allowed to start, stop, reset and suspend the VMs:

1) Go to "Home" => "Administration" => "Roles" => "[vSphere server name]"

2) Right-click in the left frame and select "Add..."

3) Give this role any name, e.g. "RHEL-HA fence_vmware_soap"

4) Under "Privileges", expand the tree "All Privileges" => "Virtual machine" => "Interaction"

5) Check both the "Power Off" and "Power On" boxes

6) Press the "OK" button

7) Then associate this role with the user/group you want running fence_vmware_soap.

In other words, under "Virtual machine" => "Interaction", select only the power on/off and suspend/reset privileges.

Then we create a service account; let's call it svc.la1. This can be done locally in the VMware database, in Active Directory, or in any other LDAP server you use for authentication.

We then look up that user (in this case the domain is "SS"), select it, and click "Add".

It will then look like this:

We then test that it works:

[root@bc03 ~]# fence_vmware_soap --ip 10.10.10.10 --username "USER YOU CREATED" --password "PASSWORD" -z -U 4211547e-65df-2a65-7e17-d1e731187fdd --action="reboot"
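Since reboot really does power-cycle the target, a gentler first test is the status action, which only asks vCenter for the VM's power state. Here's a sketch that wraps the same command and tries status before reboot. VCENTER, FENCE_USER, FENCE_PASS, VM_UUID and DRY_RUN are placeholder variables introduced for this example; run it from the node that is not being fenced.

```bash
#!/usr/bin/env bash
# Sketch: exercise fence_vmware_soap, non-destructive "status" first.
# VCENTER/FENCE_USER/FENCE_PASS/VM_UUID and DRY_RUN are placeholders.
set -e

# Run (or, with DRY_RUN set, print) the agent with action $1, using the
# same options as the manual test above.
run_fence() {
  local action="$1"
  local cmd=(fence_vmware_soap --ip "$VCENTER" --username "$FENCE_USER"
             --password "$FENCE_PASS" -z -U "$VM_UUID" --action "$action")
  ${DRY_RUN:+echo} "${cmd[@]}"
}

if [ "${1:-}" = "--run" ]; then
  # Only power-cycle the peer if querying its state works.
  run_fence status && run_fence reboot
fi
```

If status succeeds but reboot fails, the login itself is probably fine and the role is more likely missing the power privileges listed earlier.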

By the way, you can get the UUID of the VM with PowerShell (VMware PowerCLI) like this:

PowerCLI> Get-VM "NAME_OF_VM" | %{(Get-View $_.Id).config.uuid}
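If you don't have PowerCLI handy, the agent itself can usually list what the account can see with --action list. The versions I've used print one name,UUID pair per line, but treat that format as an assumption and look at your own output first. The sketch below (uuid_for_vm is a hypothetical helper) picks the UUID for one VM name out of such a list.

```bash
#!/usr/bin/env bash
# Sketch: look up a VM's UUID from "name,uuid" lines (the format assumed
# for fence_vmware_soap --action list output).
set -eo pipefail

# Print the UUID for VM name $1 read from stdin; non-zero if not found.
uuid_for_vm() {
  awk -F, -v vm="$1" '$1 == vm { print $2; found = 1 } END { exit !found }'
}

# Usage: VCENTER=... FENCE_USER=... FENCE_PASS=... bash uuid.sh --run VM01
if [ "${1:-}" = "--run" ]; then
  fence_vmware_soap --ip "$VCENTER" --username "$FENCE_USER" \
    --password "$FENCE_PASS" -z --action list | uuid_for_vm "$2"
fi
```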

If it doesn't work, it will say something like this:

[root@bc03 ~]# fence_vmware_soap --ip 10.10.10.10 --username "svc.rhc.local" --password "password" -z --action reboot -U "4211547e-65df-2a65-7e17-d1e731187fdd"

No handlers could be found for logger "suds.client"

Unable to connect/login to fencing device

[root@bc03 ~]#

(I'm running this command from the other VM, BC03, so that it fences the other node and not itself.)

The first line is just a Python message saying that the script has no logging handler configured; the second line, however, indicates an authentication problem. You can confirm this by looking at the vCenter server logs in C:\ProgramData\VMware\VMware VirtualCenter\Logs\, or by creating an "Admin" user in AD and seeing whether it then works (see my note above).
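Because that message covers both "can't reach vCenter" and "vCenter rejected the login", it can help to split the two. A minimal sketch, assuming vCenter's SOAP endpoint is on the default HTTPS port 443: if the port answers but the agent still fails, the account or its permissions are the likely culprit (see the SSO note above); if the port doesn't answer, look at the network or firewall first. The helper names are hypothetical; the TCP test uses bash's built-in /dev/tcp redirection and coreutils timeout.

```bash
#!/usr/bin/env bash
# Sketch: tell "cannot connect" apart from "cannot log in" for the
# fence_vmware_soap error shown above. Helper names are hypothetical.
set -e

# Print a short classification of captured agent output $1.
classify_fence_output() {
  case "$1" in
    *"Unable to connect/login"*) echo "connect-or-login-failure" ;;
    *) echo "other" ;;
  esac
}

# True if host $1 accepts a TCP connection on port 443 within 5 seconds.
vcenter_port_open() {
  timeout 5 bash -c "exec 3<>/dev/tcp/$1/443" 2>/dev/null
}

# Usage: bash diagnose.sh --run 10.10.10.10 "$(fence_vmware_soap ... 2>&1)"
if [ "${1:-}" = "--run" ]; then
  if [ "$(classify_fence_output "$3")" = "connect-or-login-failure" ]; then
    if vcenter_port_open "$2"; then
      echo "vCenter port 443 is reachable: check the account and its role/permissions"
    else
      echo "cannot reach $2:443: check network, DNS and firewalls first"
    fi
  fi
fi
```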